Contact us

First Floor, 159 Victoria Pde

Collingwood, VIC 3066

(Google Map)

1300 727 952

or

+61 3 9910 4099

Data and content migration with govCMS

Salsa Digital recently worked with Acquia to migrate 5000+ pages of content "as-is" from a legacy CMS to govCMS (Drupal) for the Civil Aviation Safety Authority (CASA).

Not what I first expected

Salsa Digital was recently selected to work with Acquia on one of the first public govCMS platform migration from a proprietary CMS for the Civil Aviation Safety Authority (CASA).

One of the major deliverables for this project was migrating all of the original 5000+ pages of content as-is from a legacy CMS to govCMS (Drupal).

I was keen to put my many years of Drupal experience to the test with this govCMS platform migration project, although it was not entirely what I expected!

So you want to build a Drupal site on the govCMS platform? A note for the code ninjas out there, you may find that it’s not very exciting. No custom code allowed. Everything must be done via the Admin UI; no code access and no database access either.

And with good reason too, no custom code means reduced risk of security threats and greater use of proven, compliant and mature configuration modules.

So when I was assigned the migration task I felt somewhat uncertain. How would I migrate a site to Drupal without writing a line of code? In the end it was easier and less painful than I thought.

How migration works

Acquia uses the Acquia Cloud Site Factory (ACSF) environment for govCMS. However all work is done on the Acquia Cloud platform first before it’s moved to ACSF. No code from Acquia Cloud will go to ACSF, only the database will. When the site is ready, Acquia “forklift" the database to ACSF.

While on Acquia Cloud, yes, I have access to both code and database. This is the only chance to actually write code that works with Drupal. I could install the Migrate module (which is not included in the govCMS codebase) and write my own custom module for migrating data. At this point, migrating to govCMS is just like any other Drupal project.

My task was to migrate the legacy content from the legacy website to Drupal on govCMS. The legacy website was running on an unsupported Netcat CMS. I wasn’t too sure about its underlying platform (it seems to be powered by a CGI-based application on Windows), but its database is Interbase/Firebird. I didn't have direct access to the legacy system but I was given an SQL dump and an archive, which included a few GB of assets.

The database dump was in Firebird SQL syntax and I didn’t really want to install Firebird, however I managed to convert the Firebird data to MySQL. Thankfully the database structure was quite straightforward and the conversion was done relatively easily with regex search-and-replace. A few hundred megabyte database wasn’t an issue for content migration to Drupal.

Get familiar with the source data

I started by identifying how each piece of data was stored in the legacy database. Then I discovered some challenges:

-

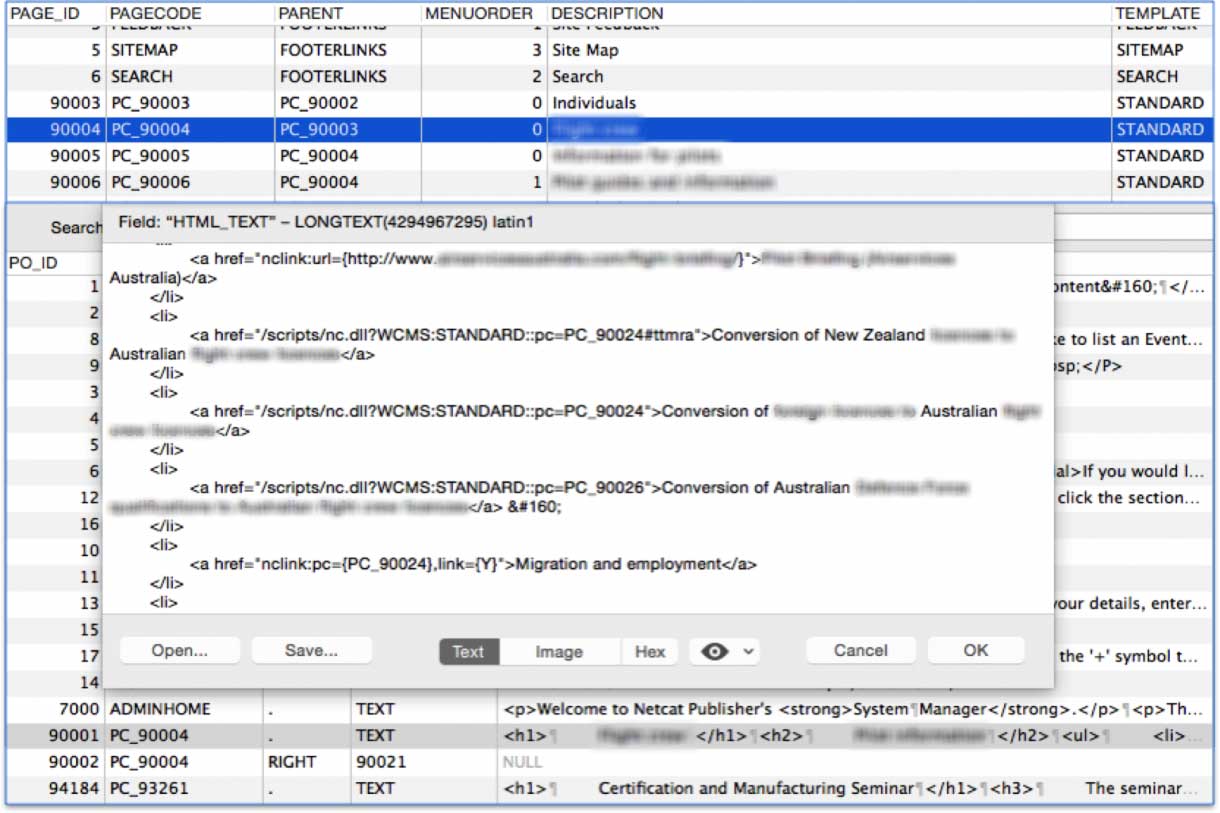

The legacy content was not always consistent. The main content of the legacy system was captured in only two tables PAGEHEAD and PAGEOBJS. The PAGEHEAD itself not only contained page metadata (such as title, timestamp, publishing status, etc.) but also hierarchy and menu settings. The PAGEOBJS held the body content of the pages. However not every page had body content. Some pages were just a link to an ASP page (yes ASP). I didn't know how these links were managed in the legacy system. Some pages were marked as unpublished in the database but publicly accessible on the live site. There were many inconsistencies, which meant going back and forth for clarification from the client.

-

The content was not always in HTML. There were special tokens processed by Netcat when rendering the page. As there is no custom code on ACSF to render those tokens, they needed to be converted to a Drupal-compatible format during the migration. Some of them were converted to links, others were converted to Media tokens. The conversion was mainly done by using preg_match_replace() with fairly complicated regular expressions. Anything that couldn't be converted was commented out in HTML.

Figure 1: PAGEHEAD and PAGEOBJ tables

-

Character encoding was also a challenge. I couldn’t rely on my understanding around ANSI and ISO-8859-1 and I’m still unsure about the charset used in the legacy system. I had to write a function to map some weird characters to the correct ones, mostly quotes, apostrophes, dashes and hyphens.

-

The legacy hierarchy was migrated to Drupal via entity reference fields. That means sometimes during the migration a node had references to something that didn't yet exist. It's not a chicken and egg thing because the Migration module already has built-in support with stub nodes.

-

The existing menu structure with up to 500+ items also needed to be retained during the migration. I couldn't think of any solution better than implementing it with the postImport() method of the Migration class. It delivered the desired outcome, but unfortunately made incremental migration somewhat impossible.

-

All migrated content was to have new simple and search engine friendly URLs, however the existing complex URLs still needed to work. I had created a bunch of redirects for each node in the complete() function to retain existing URLs.

Discrete migrations

One migration can only bring one set of source data across to Drupal content. The legacy system had more than one type of content. I had to write several migration classes to migrate them to Drupal content types. Below are some distinct migrations.

-

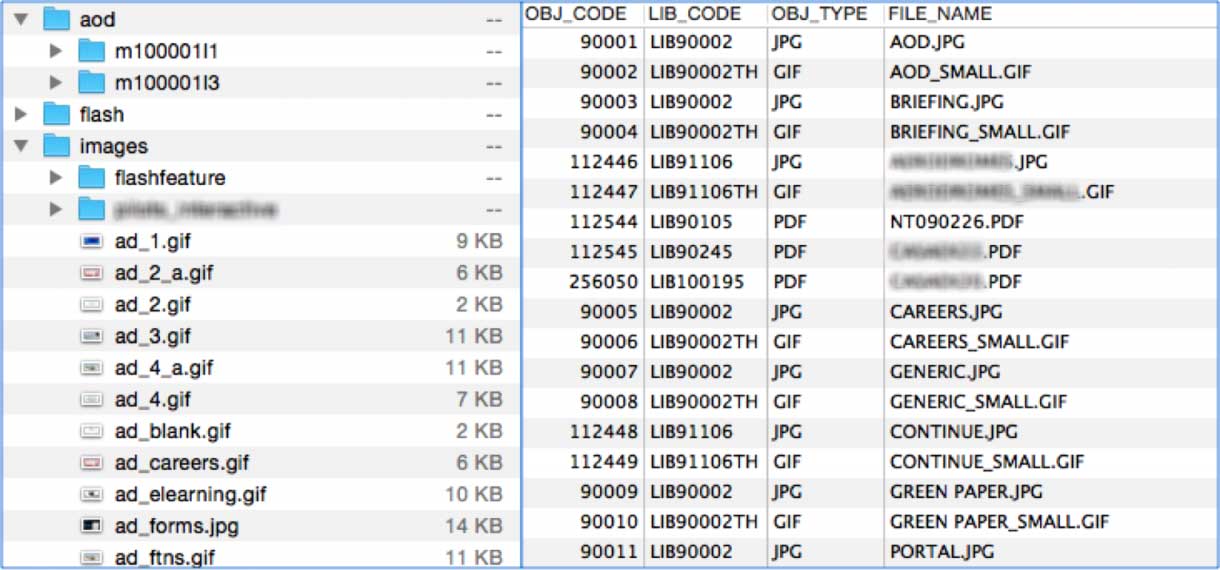

Assets - all images and documents were tracked in a few different tables in the legacy database, and referenced in the page content via Netcat special tokens. The Migration class converted those assets to File Entities in Drupal.

Figure 2: Legacy assets and their database records

-

Pages - as illustrated above there were several challenges with page content making the Migration class quite heavy with the overridden prepareRow(), prepare(), complete() and postImport() methods.

-

CSV - not all legacy content was stored in the database. The legacy system also rendered many pages using static CSV files. Here comes the job for Feeds and Feeds Tamper modules, which are already included in the codebase of govCMS. There were a few simple CSV migrations done via the Feeds module instead of writing a Migration class.

Performance considerations

The amount of data to be migrated was not massive but performance was still one of my key considerations. My Migration class initially consumed more than 500MB of memory when migrating about 5000+ pages. The heavy part was accounted for by building the menu items based on entity references. Resetting the entity cache when calling node_load() in my menu-building function reduced the memory footprint down to just a half. And I also figured out that by disabling the Search API module the migration time would be significantly reduced.

Go-live and post go-live

After the client had approved the migrated content on Acquia Cloud, the database was forklifted to ACSF and the new site went live. But my work didn't stop there. Within the first few weeks there were requests from the client stemming from automated processes on migrated content.

While ACSF doesn't allow any code to be uploaded to its platform it does provide drush access. This powerful tool helped me greatly, although it still needs some fine-tuning. The drush ev command can't run complex code, and a code snippet couldn't be uploaded to ACSF for drush scr. I was scratching my head until I remembered a very simple (and somewhat suspicious) solution: drush ev "eval(base64_decode('PD9waHAKCi8qK...'));". Does it look familiar (and again, suspicious)?

Conclusion

I was honoured to be involved in building the very first govCMS website. And working with govCMS was a unique experience in my Drupal career.

However, building a website without writing code is still somewhat unnerving for me as a developer. Migrating and theming were the only chances I had to write Drupal code. Did this mean it was mundane? Not at all. More restrictions meant I had to rise to the challenge and find new and more creative ways to deliver outcomes. Thanks govCMS.